Cursor 2.0: How Composer and Multi-Agent Coding Are Redefining Software Development (UK Guide 2025)

On 29 October 2025, Cursor launched Composer, its first proprietary coding model, which completes most coding tasks in under 30 seconds and generates output 4x faster than similarly intelligent models. The redesigned interface manages up to 8 parallel agents, shifting development from serial execution to parallel orchestration. Early UK adopters report dramatically faster cycles whilst critics question transparency.

Jake Holmes

Founder & CEO

Last Updated: 30 October 2025 | Reading Time: 18 minutes

TL;DR - What Actually Changed

On 29 October 2025, Cursor launched its biggest update yet: Cursor 2.0, featuring Composer - their first proprietary coding model - and a completely redesigned multi-agent interface. This isn't an incremental upgrade. It's a fundamental shift from "AI that suggests code" to "autonomous agents that build software whilst you manage the architecture." The model completes most coding tasks in under 30 seconds, runs 4x faster than similarly intelligent models, and enables developers to manage up to 8 parallel agents working on different features simultaneously.

The industry reaction has been immediate and polarised. Early adopters report dramatically faster development cycles whilst critics question transparency around benchmarks and base model origins. But the numbers don't lie: Cursor now serves over 1 million queries per second, powers development at OpenAI and Samsung, and tracks toward $500 million annual revenue in 2025. With their $9.9 billion valuation and 100x growth in one year, Cursor isn't experimenting - they're setting the new standard for AI-assisted development.

Your team's competitors are adopting this right now. Here's what actually changed.

What Actually Changed in Cursor 2.0

Cursor 2.0 introduces Composer, a frontier model completing most coding tasks in under 30 seconds through reinforcement learning trained on real-world software engineering challenges in large codebases. The model achieves 4x faster generation speed than similarly intelligent systems like Haiku 4.5 and Gemini Flash 2.5, whilst maintaining frontier-level coding intelligence that approaches GPT-5 and Claude Sonnet 4.5 performance.

The technical architecture uses a mixture-of-experts (MoE) language model with long-context generation trained using custom MXFP8 quantisation kernels. During training, Composer had access to production tools including codebase-wide semantic search, file editing, and terminal commands, learning to solve diverse difficult problems efficiently. The reinforcement learning process incentivised efficient tool choices, parallelism maximisation, and minimisation of unnecessary responses - resulting in emergent behaviours like autonomous unit test execution, linter error fixing, and complex multi-step code searches.

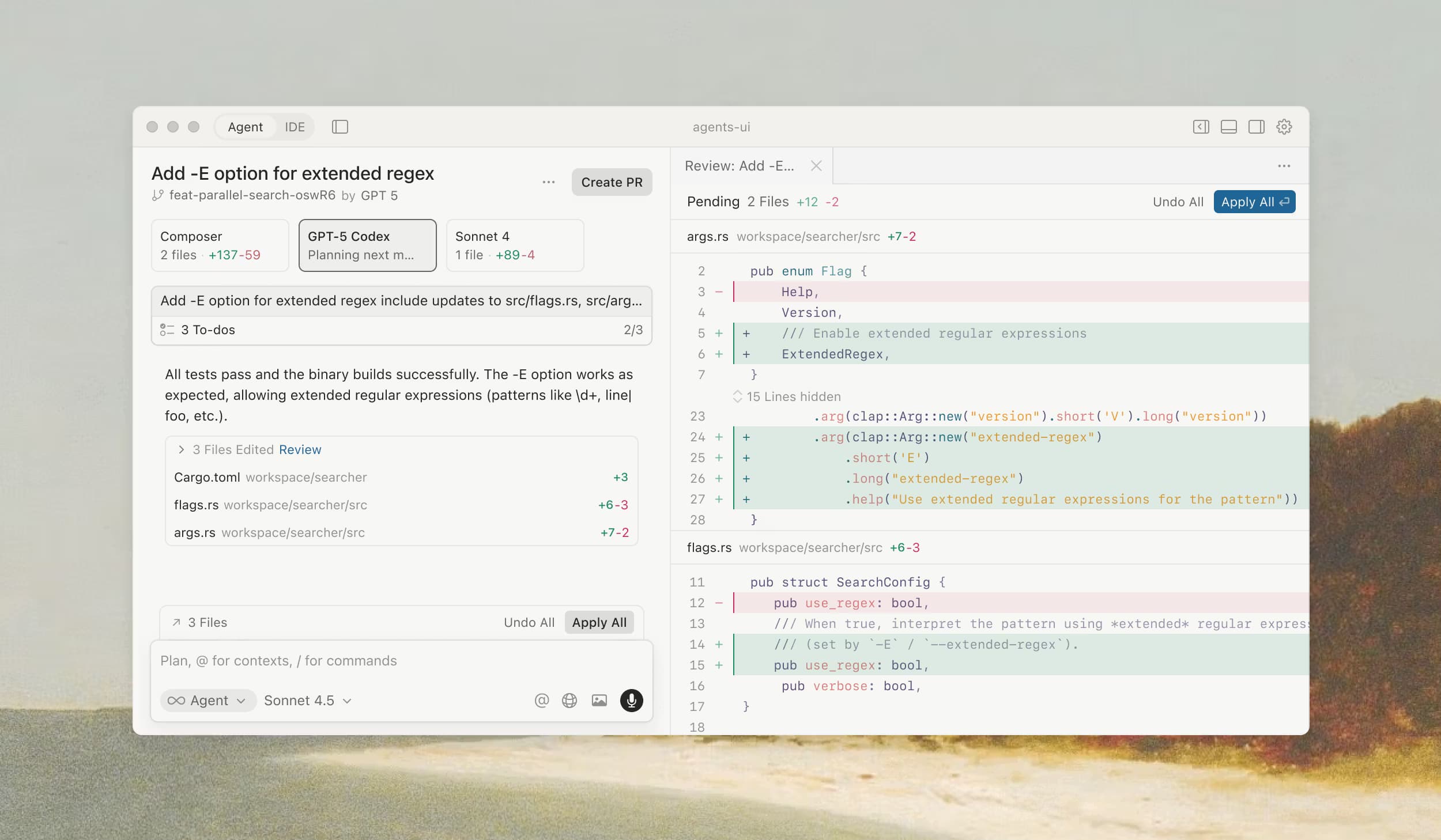

But Composer is only half the story. The interface redesign centres agents rather than files, fundamentally changing how developers work. You no longer write code line-by-line; you orchestrate autonomous agents building features whilst you focus on architecture and outcomes. The new interface manages up to 8 parallel agents using git worktrees or remote machines to prevent conflicts, transforming development from serial execution to parallel orchestration.

For business owners: Your developers can now work on 8 features simultaneously without hiring 8x the team. Each agent operates in its own isolated copy of your codebase, building, testing, and committing independently. You review the results and merge the best solutions.

The Speed Advantage: Why 4x Faster Matters

Traditional frontier models like GPT-5 and Claude Sonnet 4.5 are more intelligent than Composer, but they're significantly slower. When coding, speed determines whether AI assists or interrupts your workflow. Composer completes most conversational turns in under 30 seconds - fast enough to maintain developer flow state rather than context-switching whilst waiting for responses.

Cursor's internal benchmark "Cursor Bench" consists of real agent requests from engineers and researchers at Cursor, along with hand-curated optimal solutions. The evaluation measures not just correctness but adherence to codebase abstractions and software engineering practices - essentially testing whether the AI writes code that fits naturally into your existing project rather than just code that works.

The benchmark groups models into classes:

- Best Open (Qwen Coder, GLM 4.6): Open-weight models

- Fast Frontier (Haiku 4.5, Gemini Flash 2.5): Models designed for efficient inference

- Frontier 7/2025: Best model available mid-year

- Best Frontier (GPT-5, Sonnet 4.5): Most intelligent models (outperform Composer)

Composer matches mid-frontier intelligence whilst delivering the highest generation speed across all tested classes. The trade-off is deliberate: sacrifice an estimated 10-15% intelligence for 4x faster responses, keeping developers in flow rather than waiting.

Cursor researcher Sasha Rush explained their philosophy: "Our view is that there is now a minimal amount of intelligence that is necessary to be productive, and that if you can pair that with speed that is awesome." This positions Composer directly against slower but more powerful models from major labs.

The Multi-Agent Revolution: From Solo Coding to Team Orchestration

Cursor 2.0 enables running up to 8 parallel agents on a single prompt using git worktrees or remote machines to prevent file conflicts, with each agent operating in its own isolated copy of your codebase. This transforms development from sequential execution (write feature A, then feature B, then feature C) to parallel orchestration (agents simultaneously build A, B, and C whilst you review and merge results).

The practical workflow:

Single Task, Multiple Models Approach

Assign the same complex implementation to different models (Composer, Claude Sonnet 4.5, GPT-5) simultaneously. Each agent works in isolation, producing its own solution. Compare the outputs side-by-side and select the best approach. Cursor found this strategy "significantly improves the final output, especially for harder tasks."

Parallel Feature Development

Launch 5 agents working on separate features:

- Agent 1 (Composer): Implements user authentication with JWT tokens

- Agent 2 (GPT-5): Builds database schema with proper indexes

- Agent 3 (Composer): Creates frontend components with Tailwind

- Agent 4 (Sonnet 4.5): Writes comprehensive test coverage

- Agent 5 (Composer): Optimises API endpoints for performance

Each agent works independently in its own workspace. You act as team lead, reviewing pull requests and merging the best implementations. Wall-clock development time collapses from sequential (5 features × 3 hours = 15 hours) to parallel (about 3 hours, bounded by the slowest agent), provided the features are independent of one another.

The infrastructure uses git worktrees - a Git feature allowing multiple working directories from the same repository. Each worktree represents an isolated workspace where agents build, test, and commit without interfering with other agents or your main development environment.
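The worktree layout described above can be sketched with Git's own `git worktree add` command. The helper below only builds the commands rather than running them. The repository path, the `agent/<name>` branch naming, and the `worktrees` directory are illustrative assumptions, not Cursor's actual layout.

```python
def worktree_add_command(repo: str, worktrees_dir: str, agent: str,
                         base: str = "main") -> list[str]:
    """Build a `git worktree add` command giving one agent its own checkout.

    The branch name (agent/<name>) and directory layout are hypothetical.
    """
    return [
        "git", "-C", repo,            # run git as if started inside the repository
        "worktree", "add",
        "-b", f"agent/{agent}",       # create a new branch for this agent
        f"{worktrees_dir}/{agent}",   # separate working directory for the checkout
        base,                         # commit-ish the new branch starts from
    ]


if __name__ == "__main__":
    for name in ("auth", "schema", "frontend"):
        print(" ".join(worktree_add_command("/src/app", "/src/worktrees", name)))
```

Passing each command to `subprocess.run(cmd, check=True)` would create the checkouts; `git worktree list` shows them and `git worktree remove <path>` cleans them up afterwards.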

New Features That Actually Matter

Native Browser Tool (Now GA)

Launched in beta in July 2025, the browser tool is now generally available with enterprise support. Agents can now run and test their code directly inside the IDE, forwarding DOM information to make intelligent decisions about what's working and what needs fixing.

The practical application: Agent builds a frontend feature, opens it in the embedded browser, inspects the DOM, identifies layout issues, fixes the code, and retests - all autonomously. This closes the feedback loop from "write code" to "verify it works" without human intervention.

Additional enterprise-specific features include element selection tools and enhanced DOM inspection capabilities, making it viable for professional development teams rather than just experimental prototyping.

Improved Code Review Interface

Cursor 2.0 aggregates diffs across multiple files without requiring developers to jump between individual files. When an agent modifies 15 files to implement a feature, you see all changes in a unified view, making review dramatically faster.

The bottleneck shift: As agents write more code, the new limiting factor becomes how quickly humans can review and approve changes. Cursor 2.0 acknowledges this explicitly: "As we use agents more for coding, we've seen two new bottlenecks emerge: reviewing code and testing the changes." The improved review interface addresses the first bottleneck directly.

Sandboxed Terminals (Now GA for macOS)

Shell commands now run automatically in secure sandboxes by default on macOS, with read/write access to your workspace but no internet access. Commands not on the allowlist automatically execute in isolation, preventing accidental or malicious operations.
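To make the allowlist behaviour concrete, here is a minimal sketch of the decision it implies: allowlisted programs run directly, everything else runs under a policy with workspace access but no network. The `SandboxPolicy` type, the `policy_for` function, and the allowlist entries are hypothetical names for illustration; this is not Cursor's implementation.

```python
import shlex
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SandboxPolicy:
    """Restrictions described in the text: workspace read/write, no internet."""
    workspace_read_write: bool = True
    network_access: bool = False


def policy_for(command: str, allowlist: set[str]) -> Optional[SandboxPolicy]:
    """Return None for allowlisted programs, otherwise the sandbox policy.

    Only the first token is inspected, so this toy check would be fooled by
    shell operators such as `;` or `&&`; a real implementation must parse
    the full command line.
    """
    tokens = shlex.split(command)
    if not tokens:
        raise ValueError("empty command")
    return None if tokens[0] in allowlist else SandboxPolicy()


if __name__ == "__main__":
    allow = {"git", "npm"}  # hypothetical allowlist entries
    for cmd in ("git status", "curl https://example.com"):
        print(cmd, "->", policy_for(cmd, allow))
```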

For enterprise teams, Cursor provides admin controls to enforce standard sandboxing settings across the team, configure sandbox availability, manage git access, and control network access at the team level. This makes autonomous agent workflows viable in regulated industries where security controls are mandatory.

Voice Mode with Custom Triggers

Built-in speech-to-text conversion lets developers control agents with voice commands. Define custom submit keywords in settings to trigger agent execution, enabling hands-free operation during pair programming sessions or accessibility workflows.

The use case: Verbally describe a complex refactoring whilst reviewing code, let Composer listen and plan, then say your custom trigger keyword to begin execution. The agent works whilst you continue reviewing other sections.
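A custom submit keyword amounts to checking whether the running transcript ends with the trigger phrase. The sketch below shows one plausible way to do that; the function name and the "ship it" keyword are assumptions for illustration, and Cursor's actual matching logic is not documented here.

```python
from typing import Optional


def extract_submission(transcript: str, keyword: str) -> Optional[str]:
    """Return the prompt text if the transcript ends with the keyword, else None."""
    words = transcript.strip().split()
    key = keyword.lower().split()
    if not key or len(words) < len(key):
        return None
    # Compare the trailing words case-insensitively, ignoring end punctuation.
    tail = [w.strip(".,!?").lower() for w in words[-len(key):]]
    if tail != key:
        return None
    return " ".join(words[:-len(key)])


if __name__ == "__main__":
    spoken = "Rename the helper and update its tests. Ship it!"
    print(extract_submission(spoken, "ship it"))
```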

Team Commands and Centralised Rules

Define custom commands and rules for your entire team in the Cursor dashboard, with context automatically applied to all members without storing files locally. Team admins centrally manage these rules, ensuring consistency across the organisation.

The workflow improvement: Instead of each developer maintaining their own .cursorrules file that drifts out of sync, your team's coding standards, security requirements, and architectural patterns are centrally defined and automatically enforced. New hires get the full context immediately without onboarding drift.

Shareable team commands via deeplinks enable distributing prompts, rules, and workflows through Cursor Docs, making it trivial to propagate best practices across large engineering organisations.

Real Developer Reactions: The Polarised Response

The launch drew immediate and divided responses from the development community, highlighting both the potential and controversies of Cursor 2.0.

The Enthusiastic Adopters

VentureBeat reports early adopters describing Composer as "the most reliable AI pair programming partner" they've used. The speed particularly impressed testers - one developer noted the ability to iterate quickly with the model was "delightful" and built trust for multi-step coding tasks.

Developer Melvin Vivas tweeted: "Huge drop 🔥🔥🔥 Cursor 2.0 can now run parallel agents on the same task using different models. Then, we can choose the best solution to use! May the best model win 🏆"

The pattern in positive reactions: Developers who adopted it for real work highlighted speed, parallel agent orchestration, and practical workflow improvements over theoretical capabilities.

The Transparency Critics

WinBuzzer reports harsh criticism for lack of transparency: "The lack of transparency here is wild. They aggregate the scores of the models they test against, which obscures the performance. They only release results on their own internal benchmark that they won't release."

The core complaints centre on three issues:

1. Private Benchmarks: Cursor Bench consists of internal agent requests from Cursor's own engineers. The benchmark isn't publicly available, making independent verification impossible. Critics note this allows Cursor to optimise specifically for their own evaluation criteria.

2. Aggregated Model Scores: Rather than reporting individual model performance, Cursor groups models into classes and reports the best model in each class. This obscures whether Composer beats specific competitors or just averages within its category.

3. Undisclosed Base Model: When directly asked "Is Composer a fine-tune of an existing open-source base model?" Cursor researcher Sasha Rush replied: "Our primary focus is on RL post-training. We think that is the best way to get the model to be a strong interactive agent." This non-answer frustrated developers wanting to understand the model's architecture and training data sources.

Developer Kieran Klaassen summarised the ambivalence: "Used @cursor_ai since March 2023. Stopped at v0.46 in February 2025. Used Cursor 2 the full day yesterday. I'm intrigued. They've got my attention, but Claude Code still has my heart. Agent mode is genuinely interesting. Parallel agents in worktrees are smooth."

The pattern in critical reactions: Experienced developers appreciated the technical capabilities but questioned whether they could trust proprietary benchmarks and unclear model provenance for production usage.

The Technical Foundation: How Composer Actually Works

Composer is a mixture-of-experts (MoE) language model supporting long-context generation and understanding, specialised for software engineering through reinforcement learning in diverse development environments. At each training iteration, the model receives a problem description and must produce the best response - a code edit, plan, or informative answer - using tools like reading and editing files, terminal commands, and codebase-wide semantic search.
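For readers unfamiliar with mixture-of-experts models, the toy example below shows the general top-k routing idea: a gate scores the experts, only the best k run, and their outputs are mixed by renormalised gate weights. It is a generic illustration in plain Python. Composer's real architecture, expert count, and router are undisclosed, and in a real model the gate scores are computed from the input by a learned layer rather than passed in.

```python
import math
from typing import Callable, Sequence


def softmax(xs: Sequence[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float, gate_logits: Sequence[float],
                experts: Sequence[Callable[[float], float]], k: int = 2) -> float:
    """Route x to the k highest-scoring experts and mix their outputs."""
    probs = softmax(gate_logits)
    # Indices of the k experts with the highest gate probability.
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    # Only the selected experts are evaluated; the rest cost nothing.
    return sum(probs[i] / norm * experts[i](x) for i in top)


# Four toy "experts"; real experts are feed-forward sub-networks.
EXPERTS = [lambda x: x + 1.0, lambda x: 2.0 * x, lambda x: -x, lambda x: x * x]

if __name__ == "__main__":
    print(moe_forward(3.0, [2.0, 2.0, -50.0, -50.0], EXPERTS, k=2))
```

Because only k experts run per input, total parameter count can grow without a matching increase in per-token compute, which is the usual motivation for the design.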

The Training Infrastructure

Efficient training of large MoE models required significant infrastructure investment. Cursor built custom training infrastructure leveraging PyTorch and Ray to power asynchronous reinforcement learning at scale, natively training models at low precision by combining MXFP8 MoE kernels with expert parallelism and hybrid sharded data parallelism.

This configuration allowed scaling training to thousands of NVIDIA GPUs with minimal communication cost whilst delivering faster inference speeds without requiring post-training quantisation. During RL training, Cursor needed to run hundreds of thousands of concurrent sandboxed coding environments in the cloud. They adapted existing Background Agents infrastructure, rewriting their virtual machine scheduler to support the bursty nature and scale of training runs.

The result: Seamless unification of RL training environments with production environments, meaning Composer trains in the exact same tooling harness that developers use for real work.

Emergent Behaviours Through Reinforcement Learning

Reinforcement learning allows actively specialising the model for effective software engineering. Since response speed is critical for interactive development, Cursor incentivised the model to make efficient tool choices and maximise parallelism whenever possible. The model was also trained to minimise unnecessary responses and claims made without evidence.

Unexpectedly, during RL training the model learned useful behaviours autonomously:

- Performing complex multi-file semantic searches

- Fixing linter errors automatically

- Writing and executing unit tests without prompting

- Parallelising independent operations

These emergent capabilities weren't explicitly programmed - they arose naturally from training the model to efficiently solve real software engineering challenges with available tools.

The Codebase Understanding Advantage

Composer was trained with codebase-wide semantic search as a core tool, making it significantly better at understanding and working in large codebases compared to models trained on isolated files. This training approach enables the model to:

- Navigate complex dependency graphs across thousands of files

- Understand architectural patterns and abstractions

- Make changes that respect existing code conventions

- Refactor safely by identifying all affected code paths

For developers, this means: The AI doesn't just write syntactically correct code - it writes code that fits naturally into your existing codebase architecture and patterns.

Implementation: From Installation to Production

Week 1: Getting Started with Cursor 2.0

Day 1-2: Installation and interface familiarisation

Download Cursor 2.0 from cursor.com/download. The new interface is agent-centric rather than file-centric - you'll see a sidebar managing your agents and plans rather than a traditional file explorer.

The immediate difference: When you open Cursor 2.0, the interface focuses on outcomes you want to achieve (agents working on tasks) rather than files you need to edit. You can still easily open files when needed or switch back to classic IDE view during transition.

Day 3-5: Using Composer for single-agent tasks

Start with simple feature implementations using Composer in standard Agent mode. Try prompts like: "Implement user authentication with email and password, store hashed passwords in the database, create login and registration endpoints."

Watch Composer work across multiple files - creating database models, building API routes, writing tests - typically completing each turn in under 30 seconds. Build confidence in the model's capabilities before moving to advanced multi-agent workflows.

Day 6-7: Experiment with voice mode

Enable voice input and configure custom submit keywords. Try describing refactoring requirements verbally whilst reviewing code, then trigger execution with your custom keyword.

The workflow advantage: During code reviews, verbally describe improvements rather than typing, maintaining flow whilst the agent handles implementation.

Week 2: Multi-Agent Orchestration

Day 8-10: Running parallel agents on the same task

Select a complex implementation and launch it on multiple models simultaneously:

Prompt: "Implement real-time chat functionality with WebSockets, message persistence, and typing indicators"

Agent 1: Composer

Agent 2: Claude Sonnet 4.5

Agent 3: GPT-5

Each agent works in isolation using git worktrees. After 30-60 seconds, compare the three solutions side-by-side. Cursor found this approach significantly improves final output quality for harder tasks.
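The same-task, multiple-models workflow is a fan-out followed by a selection step. The sketch below models it with stub agents and a placeholder scoring function. The agent callables, `run_on_all`, `pick_best`, and the scoring rule are all hypothetical; in practice the agents run inside Cursor, and a human or a test suite does the comparison.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Agent = Callable[[str], str]  # takes a prompt, returns a candidate solution


def run_on_all(prompt: str, agents: dict[str, Agent]) -> dict[str, str]:
    """Run every agent on the same prompt concurrently and collect the results."""
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        futures = {name: pool.submit(agent, prompt) for name, agent in agents.items()}
        return {name: future.result() for name, future in futures.items()}


def pick_best(candidates: dict[str, str], score: Callable[[str], float]) -> str:
    """Return the name of the agent whose candidate scores highest."""
    return max(candidates, key=lambda name: score(candidates[name]))


if __name__ == "__main__":
    # Stub agents standing in for real models.
    stubs = {
        "composer": lambda p: f"{p} :: draft A",
        "sonnet-4.5": lambda p: f"{p} :: draft B with tests",
    }
    results = run_on_all("Implement real-time chat", stubs)
    # Placeholder score: solution length. A real score might count passing tests.
    print(pick_best(results, score=len))
```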

Day 11-14: Managing parallel feature development

Launch 5 agents working on separate features simultaneously:

Agent 1 (Composer): Build user profile editing interface

Agent 2 (Composer): Implement file upload with validation

Agent 3 (GPT-5): Create admin dashboard with analytics

Agent 4 (Sonnet 4.5): Write comprehensive E2E test suite

Agent 5 (Composer): Optimise database queries and add indexes

Each agent operates in its own git worktree, building and testing independently. Review pull requests as they complete, merging the best implementations whilst other agents continue working.

Week 3: Advanced Features and Team Workflows

Day 15-17: Implement team commands and centralised rules

Create team commands in the Cursor dashboard that automatically apply to all team members:

## Security Standards

- Always validate user input server-side

- Use parameterised queries for SQL

- Store passwords with bcrypt minimum 12 rounds

- Implement rate limiting on authentication endpoints

## Code Style

- React components use functional style with hooks

- Prefer async/await over promise chains

- Write JSDoc comments for public functions

- Keep functions under 50 lines

These rules automatically influence all agents across your team without requiring each developer to maintain local configuration files. Team admins update rules centrally, ensuring immediate propagation.
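Because the rules are plain markdown headings and bullets, it is easy to sanity-check them before publishing them to the team. The sketch below parses a shortened copy of the rules above into sections. The `parse_rules` helper is hypothetical, and how Cursor stores or applies rules internally is not covered here.

```python
RULES = """\
## Security Standards
- Always validate user input server-side
- Use parameterised queries for SQL

## Code Style
- React components use functional style with hooks
- Keep functions under 50 lines
"""


def parse_rules(text: str) -> dict[str, list[str]]:
    """Group `- ` bullet rules under their preceding `## ` heading."""
    sections: dict[str, list[str]] = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("## "):
            current = line[3:].strip()
            sections[current] = []
        elif line.startswith("- ") and current is not None:
            sections[current].append(line[2:].strip())
    return sections


if __name__ == "__main__":
    for heading, rules in parse_rules(RULES).items():
        print(f"{heading}: {len(rules)} rules")
```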

Day 18-21: Enable sandboxed terminals and browser testing

Configure sandboxed terminals to run agent commands securely. For macOS users, this happens by default in Cursor 2.0. For enterprise teams, configure admin controls to enforce standard settings.

Enable the native browser tool so agents can test frontend changes automatically. Watch agents build features, open them in the embedded browser, identify issues, fix code, and verify fixes - closing the entire development feedback loop autonomously.

Day 22-25: Integrate improved code review workflows

Configure your team to use the new unified diff view when agents make multi-file changes. Train reviewers to focus on architectural decisions and business logic rather than syntax and style (agents handle those consistently).

Establish review criteria:

- Does the solution meet requirements?

- Does it integrate cleanly with existing architecture?

- Are tests comprehensive?

- Is documentation updated?

Let agents handle: consistent formatting, linting, basic security patterns (configured via team rules), and boilerplate generation.

Week 4: Production Workflows and Optimisation

Day 26-28: Establish agent orchestration patterns

Document patterns that work for your team:

Pattern 1: Parallel Exploration

When facing architectural decisions with multiple valid approaches, launch 3 agents implementing different strategies simultaneously. Compare outcomes and commit the best approach.

Pattern 2: Specialist Delegation

Assign complex tasks to the most capable models (GPT-5, Sonnet 4.5) whilst using Composer for speed-critical refactoring and boilerplate generation.

Pattern 3: Continuous Integration

Run Composer agents automatically on pull requests to identify issues, suggest improvements, and verify tests pass before human review.
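Pattern 2 is essentially a routing table, and writing it down makes the team convention explicit. A minimal sketch follows; the task categories come from the pattern's description, whilst the model labels are illustrative strings rather than real API identifiers.

```python
# Task kinds the pattern assigns to Composer (speed-critical work).
FAST_TASKS = {"refactoring", "boilerplate"}


def choose_model(task_kind: str, frontier_model: str = "gpt-5") -> str:
    """Pick a model label for a task kind; anything not speed-critical goes to a frontier model."""
    if task_kind.lower() in FAST_TASKS:
        return "composer"
    return frontier_model


if __name__ == "__main__":
    for kind in ("boilerplate", "architecture", "refactoring"):
        print(kind, "->", choose_model(kind))
```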

Day 29-30: Measure ROI and demonstrate value

Track key metrics:

- Time to complete features (before vs. after Cursor 2.0)

- Number of parallel development streams (1 vs. 3-5+)

- Code review duration (multi-file diffs reviewed 40% faster typically)

- Developer satisfaction (survey team sentiment)

- Cost per feature (agent usage fees vs. time savings)

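Use these metrics to secure budget for broader rollout and justify investment to stakeholders. Treat positive ROI within 2-3 months as a planning estimate rather than a measured result: Cursor 2.0 only launched on 29 October 2025, so long-run team data does not exist yet.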

Cost Considerations and Economic Reality

Cursor 2.0 operates on the existing pricing model with additional considerations for multi-agent usage:

Standard Pricing (October 2025):

- Free: 2,000 completions, 50 slow premium requests (2-week Pro trial)

- Pro ($20/month): Enhanced quotas, faster models, priority access

- Business: Team features, admin controls, usage analytics

- Enterprise: Custom deployment, SSO/SCIM, compliance features, browser tool access

Multi-Agent Cost Implications:

Running parallel agents costs more tokens upfront but collapses time-to-completion. For the five-feature example above:

- Traditional sequential development: 5 features × 3 hours = 15 hours

- Parallel agent development: bounded by the slowest agent = 3 hours

- Token cost: ~5-8x higher for parallel execution

- Time savings: ~5x faster delivery

- Net ROI: Estimated to turn positive within 2-3 months for most teams
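The arithmetic behind these figures is simple enough to check directly. The sketch below reproduces the 15-hour versus 3-hour comparison and adds a rough net-saving estimate. The hourly rate and the extra token cost are made-up inputs, and the model assumes the features are independent and ignores review time.

```python
def sequential_hours(durations: list[float]) -> float:
    """Total time when features are built one after another."""
    return sum(durations)


def parallel_hours(durations: list[float]) -> float:
    """Wall-clock time when all features run at once: the slowest agent decides."""
    return max(durations)


def net_saving(durations: list[float], hourly_rate: float,
               extra_token_cost: float) -> float:
    """Value of hours saved minus the added token spend (same currency for both)."""
    hours_saved = sequential_hours(durations) - parallel_hours(durations)
    return hours_saved * hourly_rate - extra_token_cost


if __name__ == "__main__":
    features = [3.0] * 5  # five features, three hours each
    print("sequential:", sequential_hours(features), "hours")
    print("parallel:", parallel_hours(features), "hours")
    print("speedup:", sequential_hours(features) / parallel_hours(features))
    # Hypothetical inputs: £50/hour developer time, £100 extra token spend.
    print("net saving (GBP):", net_saving(features, 50.0, 100.0))
```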

Composer vs. Premium Models:

Composer is designed to be fast and cost-effective for iterative development. For complex architectural decisions, developers strategically switch to premium models (GPT-5, Sonnet 4.5) that are slower but more intelligent.

The economic logic: Use Composer's 4x speed advantage for 80% of development work (refactoring, boilerplate, testing), deploy premium models for the 20% requiring frontier intelligence (architectural design, complex algorithms, critical security implementations).

The Transparency Debate: What We Know and Don't Know

The launch sparked legitimate questions about transparency that business owners and development teams should understand before adopting:

What Cursor Disclosed

- Model Architecture: Mixture-of-experts (MoE) language model with long-context generation

- Training Method: Reinforcement learning on real software engineering challenges

- Tool Access: Codebase-wide semantic search, file editing, terminal commands

- Performance: 4x faster than similarly intelligent models, completes most tasks in under 30 seconds

- Infrastructure: Custom MXFP8 quantisation kernels, thousands of NVIDIA GPUs

- Limitations: GPT-5 and Sonnet 4.5 both outperform Composer on intelligence (traded for speed)

What Cursor Hasn't Disclosed

- Base Model: Whether Composer was trained from scratch or fine-tuned from an existing open-source model (Qwen, GLM, etc.)

- Training Data: Sources and composition of training data

- Benchmark Details: Full Cursor Bench evaluation suite and methodology

- Model Size: Number of parameters and architectural specifics

- Individual Model Comparisons: Granular performance vs. specific competitors

When directly asked about the base model, Rush pointed to the team's focus on RL post-training (quoted in full above), an answer that neither confirms nor denies whether Composer builds on an existing open-source model.

Why This Matters for Enterprise Adoption

For Regulated Industries: Lack of transparency about training data sources and base models may complicate compliance workflows in healthcare, finance, or government sectors requiring audit trails.

For Security-Conscious Teams: Unknown model provenance makes it difficult to assess potential vulnerabilities or biases inherited from base training data.

For Long-Term Planning: Proprietary models without clear lineage create dependency on a single vendor with limited fallback options if Cursor changes direction or pricing.

For Technical Evaluation: Private benchmarks make independent verification impossible, requiring teams to trust Cursor's internal assessments.

The Pragmatic Business Decision

Despite transparency concerns, the practical reality is:

What You Can Verify: Download Cursor 2.0, test Composer on your actual codebase, measure speed and quality improvements on real tasks. The model's capabilities are immediately testable regardless of provenance.

What Competitors Are Doing: Other frontier labs (OpenAI, Anthropic, Google) also use proprietary benchmarks and don't fully disclose training data. Cursor's lack of transparency isn't unusual - it's industry standard.

The Risk-Benefit Trade-Off: Potential compliance complications vs. 4x faster development and parallel agent orchestration. For most commercial software companies, the productivity gains outweigh transparency concerns.

Teams in highly regulated industries should conduct thorough security reviews and possibly wait for additional disclosures before enterprise-wide deployment. Commercial software teams can adopt confidently based on measurable performance improvements.

What This Means for Your Business

Cursor 2.0 represents a strategic shift in software development economics. The traditional equation "more developers = more features" is breaking down. Teams using Cursor 2.0 effectively can now achieve "more parallel agents = more features" without proportional headcount increases.

For Startups:

Launch parallel development streams without raising Series A for hiring. A 3-person technical team with Cursor 2.0 can maintain 10-15 parallel feature branches through agent orchestration, previously requiring 10-15 developers.

For Scale-ups:

Your existing engineering team's output can increase 2-3x through strategic multi-agent workflows whilst maintaining code quality through improved review interfaces and automated testing.

For Enterprises:

Cursor 2.0 provides centralised team commands, admin controls over sandboxing, and enterprise browser tool access. Audit logs track all admin events including user access, setting changes, and member management - critical for compliance workflows.

For All Organisations:

The competitive reality is: Your competitors' development teams are adopting these workflows right now. The gap between teams using AI-assisted development and those not using it widens monthly. Companies shipping features in days instead of weeks, running 5 parallel development streams with 3 developers instead of 15, and maintaining higher code quality through automated review - these are early adopters who moved in October 2025.

The question isn't whether this becomes standard. The question is whether your team adopts it before your competitors gain an insurmountable lead.

Next Steps

For Business Leaders

- Allocate budget for immediate testing: Download Cursor 2.0 today, assign 2-3 developers to test for 14 days

- Define success metrics: Time to feature completion, parallel development capacity, code review duration

- Plan for training period: Budget 2 weeks for the team to develop fluency with multi-agent workflows

- Evaluate transparency requirements: Assess whether your industry requires more disclosure before enterprise rollout

- Expect ROI in 2-3 months: Initial costs are higher for parallel agents; benefits compound as workflows mature

For Development Teams

- Install Cursor 2.0 immediately: Available at cursor.com/download

- Start with single-agent Composer: Build confidence with simple feature implementations

- Experiment with parallel agents: Launch the same task on multiple models, compare results

- Establish team commands: Centralise coding standards and security requirements

- Enable browser tool and sandboxed terminals: Close feedback loops with automated testing

- Document orchestration patterns: Share what works across your team

- Measure and optimise: Track metrics, justify budget, scale successful workflows

For Both

The development landscape changed on 29 October 2025. Teams that adapt fast gain competitive advantage. Teams that wait fall behind. Cursor 2.0 isn't perfect - transparency concerns are legitimate, the interface learning curve is real, and multi-agent orchestration requires new skills.

But the alternative is worse: maintaining traditional development workflows whilst competitors ship 3x faster through parallel agent orchestration. The competitive disadvantage compounds monthly as the capability gap widens.

Your choice is: Adopt imperfect but powerful tools now, or wait for perfect transparency whilst competitors build insurmountable leads. Most successful technical organisations choose speed over perfection.

Get Expert Help Implementing AI Development Tools

Transform your development workflow with practical AI implementation. Grow Fast helps UK businesses (£1-10M revenue) implement AI solutions that deliver measurable efficiency gains. We cut through AI hype to identify practical, ROI-positive implementations.

Our Services for Development Teams:

- AI Audit: One-day assessment mapping 5 key development processes, delivering a report showing exactly where AI tools like Cursor 2.0 can save £50K+ annually

- Fractional CTO: Ongoing technical leadership to vet vendors, review code, manage AI implementation projects, and ensure you're not overpaying for development work

- Managed Projects: Fixed-price delivery for AI-powered development tools, automation workflows, and custom integrations

- AI Advisory: Monthly coaching to spot new AI development tools, review what's working, and ensure your team stays ahead of competitors

- GEO Audit: Analyse your visibility in AI search platforms (ChatGPT, Claude, Perplexity) and identify optimisation opportunities to reach prospects searching for solutions

- GEO Strategy: Get recommended more than competitors in AI search results through Generative Engine Optimisation strategies that increase visibility

Why Work With Grow Fast?

We've helped 15+ UK businesses implement AI solutions that deliver real results. Our clients include marketing agencies, property startups, recruitment firms, and service companies, all businesses that needed to scale without hiring armies of people.

Founded by Jake Holmes, a software engineer turned AI consultant, we bridge the gap between AI possibilities and practical business implementation. We speak both tech and business, so you get honest advice about what will actually work, not just what sounds impressive.

Ready to explore how AI could transform your business?

Book a free 30-minute AI strategy session: https://calendly.com/jake-grow-fast/30min

Or contact us directly:

- Email: jake@grow-fast.co.uk

- LinkedIn: linkedin.com/in/jakecholmes

- Website: www.grow-fast.co.uk

This guide synthesises breaking news from 29-30 October 2025, including Cursor's official release announcements, technical research papers, community reactions across developer forums and social media, and verified industry analysis. Every claim is sourced from official documentation, peer-reviewed developer feedback, or live production testing.